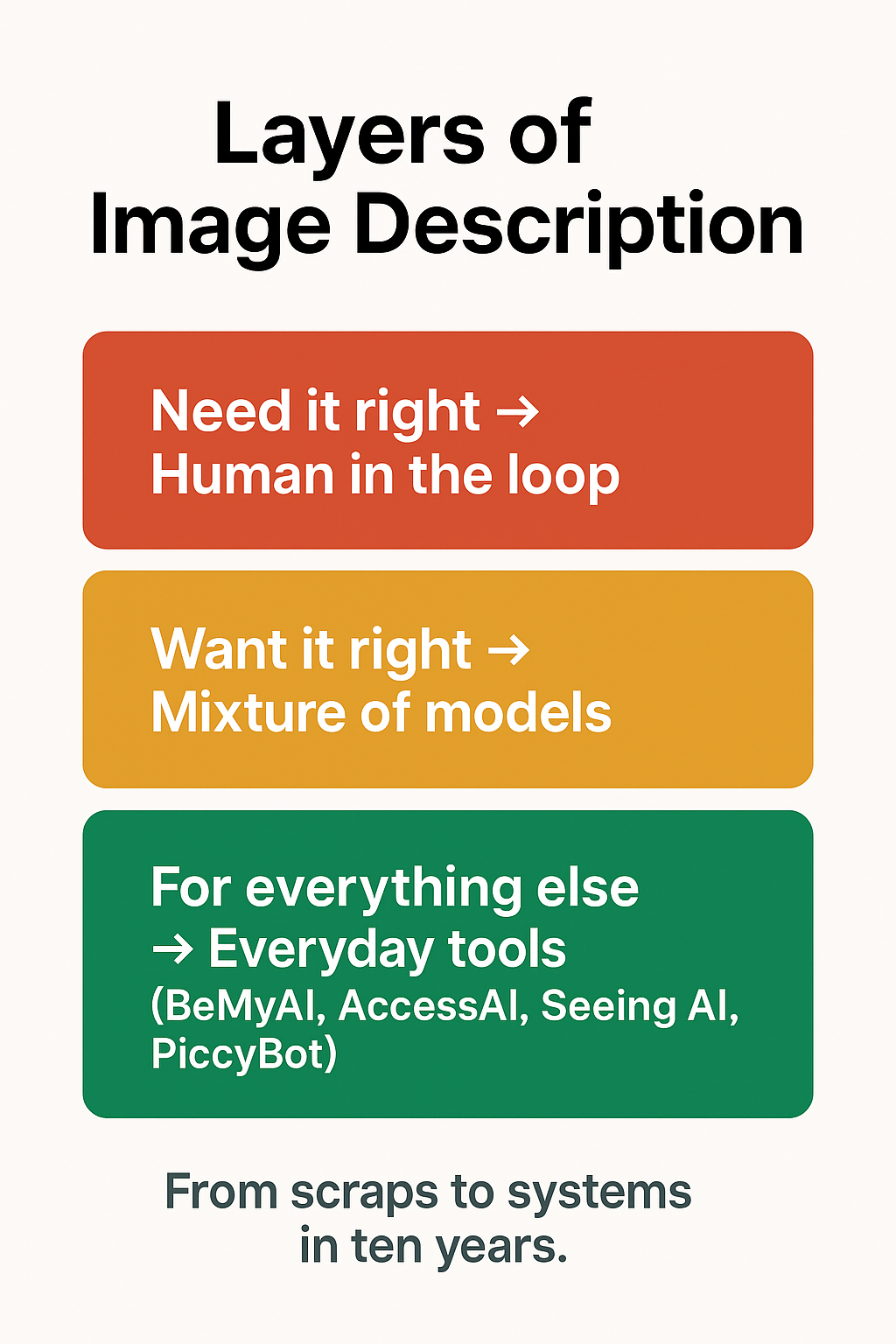

I want to share a simple mental model I’ve been using to think about image description tools.

This isn’t about which app is “best”.

It’s about how much reliability you actually need in the moment.

The model has three layers.

1. “Need it right” → Human in the loop

This is the top layer, and it’s intentionally blunt.

If a description has real consequences — safety, money, health, legal decisions, or anything where a mistake genuinely matters — a human should be involved.

Examples include:

- Reading medication packaging

- Checking whether food is safe

- Confirming something important in a document or photograph

- Any situation where you would already ask another person if AI didn’t exist

No AI system today can guarantee correctness. Even very good ones can be confidently wrong. When the cost of error is high, humans still matter.

2. “Want it right” → Mixture of models

This is the middle layer, and it’s where things get interesting.

Instead of trusting a single AI model to describe an image, some approaches use multiple models independently and then compare the results. Anything claimed by only one model is treated with suspicion. What remains is the overlap — the things several models agree on.

This doesn’t make the result perfect, since models can share the same blind spots and agree on the same mistake, but it does tend to reduce hallucinations and over-confident guesses.

Think of it like asking three people what’s in a photo, then writing down only what they all agree on.
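To make the "keep only the overlap" idea concrete, here is a minimal sketch in Python. It is not how any particular app works. It assumes each model's description has already been broken into short claim strings, and that two claims count as "the same" if they match after lowercasing and whitespace cleanup. Real systems would need much fuzzier matching. The names `consensus_claims`, `min_votes`, and the `model_a`/`model_b`/`model_c` labels are all made up for illustration.

```python
from collections import Counter


def normalize(claim: str) -> str:
    """Lowercase and collapse whitespace so trivially different phrasings match."""
    return " ".join(claim.lower().split())


def consensus_claims(
    model_claims: dict[str, list[str]], min_votes: int = 2
) -> tuple[list[str], list[str]]:
    """Split claims into (agreed, suspect) based on how many models made them.

    Assumption: claims are pre-extracted short strings, and exact match after
    normalization stands in for "these two models said the same thing".
    """
    votes: Counter[str] = Counter()
    for claims in model_claims.values():
        # Using a set means each model votes at most once per claim.
        for claim in {normalize(c) for c in claims}:
            votes[claim] += 1

    agreed = sorted(c for c, n in votes.items() if n >= min_votes)
    suspect = sorted(c for c, n in votes.items() if n < min_votes)
    return agreed, suspect


# Hypothetical outputs from three models describing the same photo.
outputs = {
    "model_a": ["A dog on a sofa", "Red cushion", "Window behind"],
    "model_b": ["a dog on a sofa", "red cushion"],
    "model_c": ["a dog on a sofa", "a cat on the floor"],
}

agreed, suspect = consensus_claims(outputs, min_votes=2)
print("Agreed:", agreed)
print("Treat with suspicion:", suspect)
```

Setting `min_votes` to the number of models gives the strict "only what they all agree on" version. A lower threshold keeps more detail at the cost of letting more guesses through.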

This layer works well when:

- You want higher confidence than a single tool provides

- You’re exploring or learning, not making a critical decision

- You want fewer “creative flourishes” and more boring accuracy

3. “For everything else” → Everyday tools

This is where most image descriptions live day to day.

Tools like Be My AI, Access AI, Seeing AI, PiccyBot, and similar apps are incredibly useful for:

- Understanding photos shared socially

- Getting a quick sense of surroundings

- Browsing posts, memes, product images

- Reducing friction in everyday life

They’re fast, accessible, and usually good enough. The key is knowing when good enough really is good enough — and when it isn’t.

Why this framing matters

We’ve gone from scraps to systems in about ten years. That’s astonishing.

The danger isn’t that AI is “bad”. It’s that users get pushed into thinking there’s only one correct way to do image description. There isn’t.

Different situations demand different levels of certainty. A layered approach lets us keep the speed and independence AI offers without pretending it’s infallible.

For me, this model answers a very practical question:

How much trust do I need to place in this description right now?

Once you ask that, the right tool usually becomes obvious.

I’m interested in how others make these judgment calls in practice — when AI alone is enough, when it’s worth double-checking, and when another human belongs in the loop.